Mishearings: Hacking a tiny ASR model to write Dadaist poetry

TL;DR: I built a tool for assembling Dadaist poems from ASR transcriptions of misheard speech, and you can play with it in your web browser. I built it with MoonshineJS, Tone.js, and p5js.

Introduction

My goal lately has been to build a JS library for simple on-device speech recognition in web applications. While there are many practical uses for this, I felt the urge recently to explore its creative potential. Speech recognition models are a bit more utilitarian than purely generative models––you put speech in and get text out––so I had my work cut out for me.

We tend to think of “AI art” as a black box, where you input a prompt and get a finished product back. That’s always seemed a little boring to me––part of the fun in art is taking the time to develop a process, and seeing how that process plays out. In the realm of AI/ML art, this can mean something like fine-tuning models with meaningful datasets, or discovering new ways to tease out unexpected outputs.

So much modern art is the sound of things going out of control, of a medium pushing to its limits and breaking apart.

–– Brian Eno, A Year With Swollen Appendices

This was my line of thinking when I built mishearings, a tool for assembling surrealist poetry from real-time transcriptions of mangled speech that are generated by a Moonshine speech-to-text model running in a web browser. By inputting audio that is plausibly speech-like (without being actual speech), we get some pretty interesting “mishearings” from an otherwise run-of-the-mill ASR model. Mishearings provides a unique interface to a speech-to-text model––one that the model was not designed to handle––and invites you to assemble the strange and unpredictable output that results into your own creation. I should say that under normal circumstances, Moonshine does its job very well: both variants outperform their OpenAI Whisper counterparts while running about 5x faster. I’m eager to point this out because, well, Useful Sensors is my employer, and I helped develop the models––but I digress. I started this project by asking myself: “How can I force Moonshine to transcribe something that isn’t speech?”

Synthesizing speech-like non-speech

My first goal was to develop an interface for manipulating speech-like sound that you could stream into the model and see the results in real-time. I would provide a blank canvas with p5js that a user could draw on with their cursor. Depending on the position of the cursor, the parameters to some kind “speech generator” would change, allowing people to create interesting audio to stream into the model. With MoonshineJS, the models run very fast and are easy to integrate on the web––placing a transcriber at the end of a WebAudio pipeline and watching the results stream back is the easy part. Synthesizing speech-like non-speech to feed into the model was the hard part.

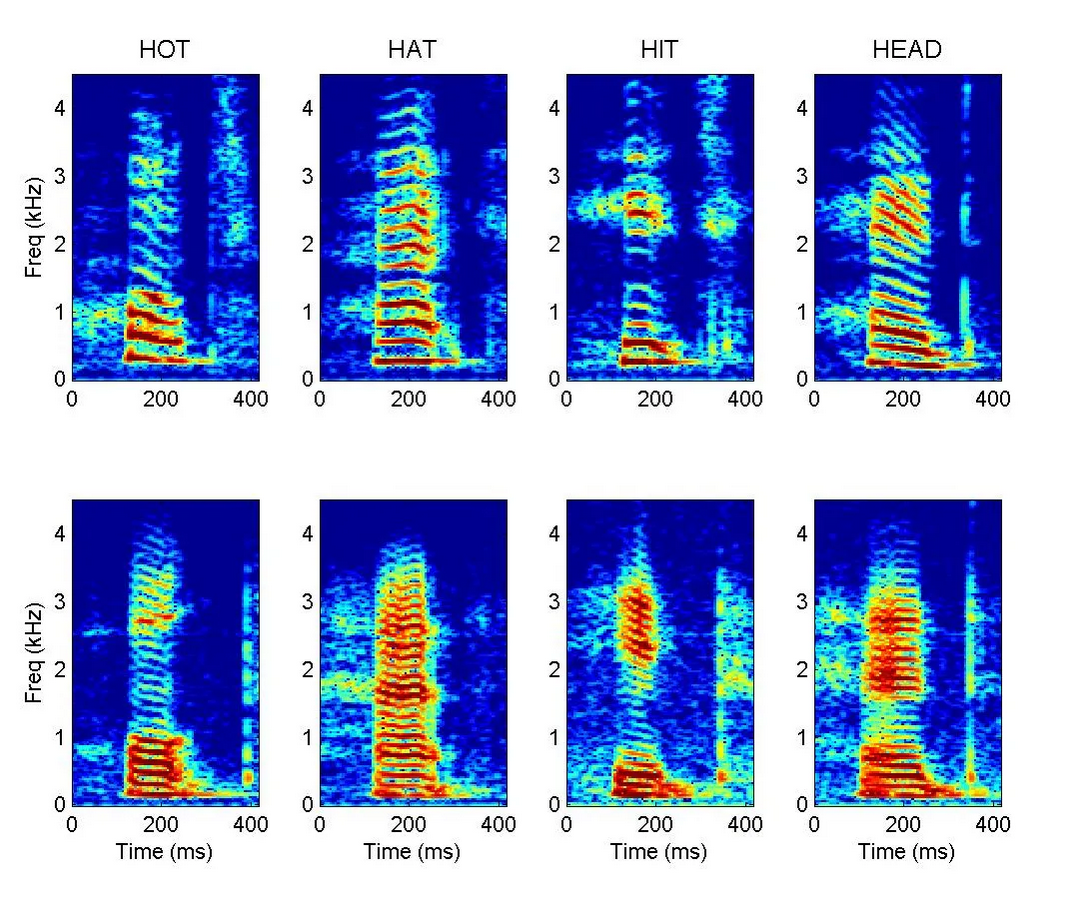

Formants visible on spectrograms. Credit: http://auditoryneuroscience.com

I started by building a formant synthesizer with Tone.js. Formant synthesis takes advantage of the fact that the human voice has distinct resonant frequency bands, called “formants,” which vary based on the speaker’s pitch and utterance. By attenuating these frequencies in an audio source (e.g., some white noise paired with a sine wave), you can synthesize sounds that resemble human speech.

I found a great article about building a web-based formant synthesizer and adapted it for my purposes. In this early version, you could control the synthesized speaker’s pitch by dragging the cursor up and down, and interpolate between the formants of different vowels by moving the cursor left and right. It took some work, sounded very cool, and completely failed at tricking Moonshine into generating transcriptions. This was a lose as far as my personal project was concerned, but also a win as far as Moonshine’s robustness is concerned. It refused to transcribe fully synthetic sounds.

Mangling speech with granular synthesis

As is often the case, my second attempt was less complicated and yielded better results. Rather than synthesizing the sound from nothing, I opted to experiment with granular synthesis. In granular synthesis, you take an input sample (e.g., an audio recording of someone speaking) and you break it down into brief, atomic snippets of sound called “grains.” You can manipulate the ordering, density, and length of the grains to create a variety of different effects.

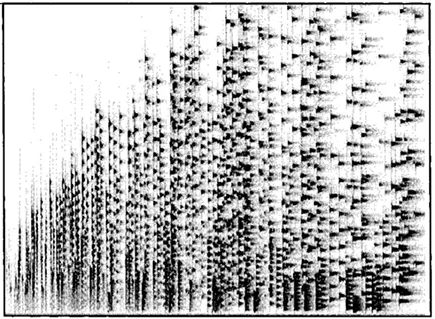

Bands of grains decreasing in density. Credit: Josh Stovall

Granular synthesis made sense: the audio sample contains the frequency content of a human voice, and the manipulation of the grains assembles the sample’s constituent parts into new patterns that resemble speech. Returning to the interface, the left-right movement of the cursor controls the grain size and the up-down movement controls the playback rate. By manipulating these parameters on a stream of speech, weird and interesting speech-like sounds are created:

When we feed this type of audio into Moonshine, it generates transcriptions—and you can’t really blame it for that. If you were instructed to pick out words in the sound sample above, you could. It’s a bit like pareidolia. If you think too hard about it, you might start reflecting on the difference between the probability distributions that models learn from data and our own brain’s ability to assemble patterns from stimuli––or on the way the model’s hallucinations in response to surreal noise resemble the disarray we might feel from information overload and the erosion of objective truth. As you play with mishearings, you might even find yourself agreeing with the model’s interpretations of nonsense.

|

|

|

|

If you want to build your own real-time, on-device speech recognition applications on the web, try out MoonshineJS. You can view the source code for mishearings on GitHub.